Posts Tagged ‘Uncertainty’

When Can More Information Lead to Worse Decisions?

Among the sacred cows of Western culture is the notion that the more information and knowledge we have, the better decisions we’ll make. I’m a subscriber to this notion too; after all, I’m in the education and research business! Most of us have been rewarded throughout our lives for knowing things, for being informed. Possessing more information understandably makes us more confident about our decisions and predictions. There also is good experimental evidence that we dislike having to make decisions in situations where we lack information, and we dislike it even more if we’re up against an opponent who knows more.

Nevertheless, our intuitions about the disadvantages of ignorance can lead us astray in important ways. Not all information is worth having, and there are situations where “more is worse.” I’m not going to bother with the obvious cases, such as information that paralyses us emotionally, disinformation, or sheer information overload. Instead, I’d like to stay with situations where there isn’t excessive information, we’re quite capable of processing it and acting on it, and its content is valid. Those are the conditions that can trip us up in sneaky ways.

An intriguing 2007 paper by Crystal Hall, Lynn Ariss and Alexander Todorov presented an experiment in which half their participants were given statistical information (halftime score and win-lose record) about opposing basketball teams in American NBA games and asked to predict the game outcomes. The other half were given the same statistical information plus the team names. Basketball fans’ confidence in their predictions was higher when they had this additional knowledge. However, knowing the team names also caused them to under-value the statistical information, resulting in less accurate predictions. In short, the additional information distracted them from the useful information.

Many of us believe experts make better decisions than novices in their domain of expertise because their expertise enables them to take more information into account. However, closer examination of this intuition via studies comparing expert and novice decision making reveals a counterintuitive tendency for experts to actually use less information than novices, especially under time pressure or when there is a large amount of information to sift through. Mary Omodei and her colleagues’ chapter in a 2005 book on professionals’ decision making presented evidence on this issue. They concluded that experts know which information is important and which can be ignored, whereas novices try to take it all on board and get delayed or even swamped as a consequence.

Stephan Lewandowsky and his colleagues and students found evidence that even expert knowledge isn’t always self-consistent. Again, seemingly relevant information is the culprit. Lewandowsky and Kirsner (2000) asked experienced wildfire commanders to predict the spread of simulated wildfires. There are two primary relevant variables: Wind velocity and the slope of the terrain. In general, fires tend to spread uphill and with the wind. Given a downhill wind, a sufficiently strong wind pushes the fire downhill with it, otherwise the fire spreads uphill against the wind.

But it turned out that the experts’ predictions under these circumstances depended on an additional piece of information. If it was a wildfire to be brought under control, experts expected it to spread downhill with the wind. If an identical fire was presented as a back burn (i.e., lit by the fire-fighters themselves) experts predicted the reverse, that the fire would spread uphill against the wind. Of course, this is ridiculous: The fire doesn’t know who lit it. Lewandowsky’s group reproduced this phenomenon in the lab and named it knowledge partitioning, whereby people learn two opposing conclusions from the same data, each triggered by an irrelevant contextual cue that they mistake for additional knowledge.

Still, knowing more increases the chances you’ll make the right choices, right? About 15 years ago Peter Ayton, an English professor visiting a Turkish university, had the distinctly odd idea of getting Turkish students to predict 32 English FA cup third-round match winners. After all, the Turkish students knew very little about English soccer. To his surprise, not only did the Turkish students do better than chance (63%), they did about as well as a much better-informed sample of English students (66%).

How did the Turkish students manage it? They were using what has come to be called the recognition heuristic: If they recognized one team name or its city of origin but not the other, in 95% of the cases they predicted the recognized team would win. If they recognized both teams, some of them applied what they knew about the teams to decide between them. Otherwise, they guessed.
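The decision rule just described is simple enough to write down. Here is a minimal sketch in Python, not the procedure used in Ayton’s study: the function name `predict_winner`, the `recognized` set, the optional `knowledge` ratings and the placeholder team names are all assumptions made for illustration. It also applies the rule every time, whereas the students followed it in about 95% of cases.

```python
import random

def predict_winner(team_a, team_b, recognized, knowledge=None, rng=random):
    """Pick a winner with the recognition heuristic (illustrative sketch).

    recognized: set of team names the person has heard of (assumed input).
    knowledge:  optional dict of team -> strength rating, consulted only
                when both teams are recognized (assumed input).
    """
    knows_a, knows_b = team_a in recognized, team_b in recognized
    # Exactly one team recognized: bet on the recognized one.
    if knows_a != knows_b:
        return team_a if knows_a else team_b
    # Both recognized: fall back on whatever else is known, if anything.
    if knows_a and knows_b and knowledge:
        score_a = knowledge.get(team_a)
        score_b = knowledge.get(team_b)
        if score_a is not None and score_b is not None and score_a != score_b:
            return team_a if score_a > score_b else team_b
    # Neither recognized, or no usable knowledge: guess.
    return rng.choice([team_a, team_b])
```

For example, `predict_winner("Team A", "Team B", {"Team A"})` returns `"Team A"` regardless of which team is listed first.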

So, how could the recognition heuristic do better than chance? The teams or team cities that the Turkish students recognized were more likely than the other teams to appear in sporting news because they were the more successful teams. So the more successful the team, the more likely it would be one of those recognized by the Turkish students. In other words, the recognition cue was strongly correlated with the FA match outcome.

Many of the more knowledgeable English students, on the other hand, recognized all of the teams. They couldn’t use a recognition cue but instead had to rely on knowledge cues, things they knew about the teams. How could the recognition cue do as well as the knowledge-based cues? An obvious possible explanation is that the recognition cue was more strongly correlated with the FA match outcomes than the knowledge cues were. This was the favored explanation for some time, and I’ll return to it shortly.

In two classic papers (1999 and 2002) Dan Goldstein and Gerd Gigerenzer presented several empirical demonstrations like Ayton’s. For instance, a sample of American undergraduates did about as well (71.4% average accuracy) at picking which of two German cities has the larger population as they did at choosing between pairs of American cities (71.1% average accuracy), despite knowing much more about the latter.

It gets worse. An earlier study by Hoffrage in his 1995 PhD dissertation had found that a sample of German students actually did better on this task with American than German cities. Goldstein and Gigerenzer also reported that about two thirds of an American sample responded correctly when asked which of two cities, San Diego or San Antonio, is the larger, whereas 100% of a German sample got it right. Only about a third of the Germans recognized San Antonio. So not only is it possible for less knowledgeable people to do about as well as their more knowledgeable counterparts in decisions such as these, they may even do better. The phenomenon of more ignorant people outperforming more knowledgeable ones on decisions such as which of two cities is the more populous became known as the “less-is-more” effect.

And it can get even worse than that. A 2007 paper by Tim Pleskac produced simulation studies showing that it is possible for imperfect recognition to produce a less-is-more effect as well. So an ignoramus with fallible recognition memory could outperform a know-it-all with perfect memory.

For those of us who believe that more information is required for better decisions, the less-is-more effect is downright perturbing. Understandably, it has generated a cottage-industry of research and debate, mainly devoted to two questions: To what extent does it occur and under what conditions could it occur?

I became interested in the second question when I first read the Goldstein-Gigerenzer paper. One of their chief claims, backed by a mathematical proof by Goldstein, was that if the recognition cue is more strongly correlated than the knowledge cues with the outcome variable (e.g., population of a city) then the less-is-more effect will occur. This claim and the proof were prefaced with an assumption that the recognition cue correlation remains constant no matter how many cities are recognized.

What if this assumption is relaxed? My curiosity was piqued because I’d found that the assumption often was false (other researchers have confirmed this). When it was false, I could find examples of a less-is-more effect even when the recognition cue correlation was less than that of the knowledge cue. How could the recognition cue be outperforming the knowledge cue when it’s a worse cue?

In August 2009 I was visiting Oxford to work with two colleagues there, and through their generosity I was housed at Corpus Christi College. During the quiet nights in my room I tunnelled my way toward understanding how the less-is-more effect works. In a nutshell, here’s the main part of what I found (those who want the whole story in all its gory technicalities can find it here).

We’ll compare an ignoramus (someone who recognizes only some of the cities) with a know-it-all who recognizes all of them. Let’s assume both are using the same knowledge cues about the cities they recognize in common. There are three kinds of comparison pairs: Both cities are recognized by the ignoramus, only one is recognized, and neither is recognized.

In the first kind the ignoramus and know-it-all perform equally well because they’re using the same knowledge cues. In the second kind the ignoramus uses the recognition cue whereas the know-it-all uses the knowledge cues. In the third kind the ignoramus flips a coin whereas the know-it-all uses the knowledge cues. Assuming that the knowledge-cue accuracy for these pairs is higher than coin-flipping, the know-it-all will outperform the ignoramus in comparisons between unrecognized cities. Therefore, the only kind of comparison where the ignoramus can outperform the know-it-all is recognized vs unrecognized cities. This is where the recognition cue has to beat the knowledge cues, and it has to do so by a margin sufficient to make up for the coin-flip-vs-knowledge cue deficit.
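The bookkeeping in that argument can be made concrete. The sketch below is my own illustration under simplifying assumptions, not the derivation in the paper: it assumes every pair of items is equally likely to be compared, and the function names and all the accuracy figures fed into them are made up for the example.

```python
from math import comb

def pair_shares(n_items, n_recognized):
    """Fractions of all pairs with both, exactly one, or neither item recognized."""
    total = comb(n_items, 2)
    both = comb(n_recognized, 2)
    neither = comb(n_items - n_recognized, 2)
    one = n_recognized * (n_items - n_recognized)
    return both / total, one / total, neither / total

def accuracy_gap(n_items, n_recognized, recog_acc, know_acc_one, know_acc_neither):
    """Ignoramus accuracy minus know-it-all accuracy.

    recog_acc:        recognition-cue accuracy on recognized-vs-unrecognized pairs
    know_acc_one:     know-it-all's knowledge-cue accuracy on those same pairs
    know_acc_neither: know-it-all's accuracy on pairs the ignoramus must guess
    Pairs where both items are recognized cancel out, because both people
    use the same knowledge cues there.
    """
    _, one, neither = pair_shares(n_items, n_recognized)
    return one * (recog_acc - know_acc_one) - neither * (know_acc_neither - 0.5)
```

A positive gap is a less-is-more effect. With 100 items of which 50 are recognized, `accuracy_gap(100, 50, 0.8, 0.6, 0.6)` comes to about 0.076, so the ignoramus wins. `accuracy_gap(100, 50, 0.65, 0.6, 0.8)` comes to about -0.049, because the recognition cue’s small edge cannot make up for the coin-flipping deficit.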

It turns out that, in principle, the recognition cue can be so much better than the knowledge cues in comparisons between recognized and unrecognized cities that we get a less-is-more effect even though, overall, the recognition cue is worse than the knowledge cues. But could this happen in real life? Or is it so rare that you’d be unlikely to ever encounter it? Well, my simulation studies suggest that it may not be rare, and at least one researcher has informally communicated empirical evidence of its occurrence.

Taking into account all of the evidence thus far (which is much more than I’ve covered here), the less-is-more effect can occur even when the recognition cue is not, on average, as good as knowledge cues. Mind you, the requisite conditions don’t arise so often as to justify mass insurrection among students or abject surrender by their teachers. Knowing more still is the best bet. Nevertheless, we have here some sobering lessons for those of us who think that more information or knowledge is an unalloyed good. It ain’t necessarily so.

To close off, here’s a teaser for you math freaks out there. One of the results in my paper is that the order in which we learn to recognize the elements in a finite set (be it soccer teams, cities,…) influences how well the recognition cue will work. For every such set there is at least one ordering of the items that will maximize the average performance of this cue as we learn the items one by one. There may be an algorithm for finding this order, but so far I haven’t figured it out. Any takers?

Disappearing Truths or Vanishing Illusions?

Jonah Lehrer, in his provocative article in the New Yorker last month (December 13th), drew together an impressive array of scientists, researchers and expert commentators remarking on a puzzling phenomenon. Many empirically established scientific truths apparently become less certain over time. What initially appear to be striking or even robust research findings seem to become diluted by failures to replicate them, dwindling effect-sizes even in successful replications, and/or the appearance of outright counter-examples. I won’t repeat much of Lehrer’s material here; I commend his article for its clarity and scope, even if it may be over-stating the case according to some.

Instead, I’d like to add what I hope is some helpful commentary and additional candidate explanations. Lehrer makes the following general claim:

“Most of the time, scientists know what results they want, and that can influence the results they get. The premise of replicability is that the scientific community can correct for these flaws. But now all sorts of well-established, multiply confirmed findings have started to look increasingly uncertain.”

His article surveys three manifestations of the dwindling-truth phenomenon:

- Failure to replicate an initially striking study

- Diminishing effect-sizes (e.g., dwindling effectiveness of therapeutic drugs)

- Diminishing effect-sizes over time in a single experiment or study

Two explanations are then advanced to account for these manifestations. The first is publication bias, and the second is regression to the mean.

Publication bias refers to a long-standing problem in research that uses statistical tests to determine whether, for instance, an experimental medical treatment group’s results differ from those of a no-treatment control group. A statistically significant difference usually is taken to mean that the experimental treatment “worked,” whereas a non-significant difference often is interpreted (mistakenly) to mean that the treatment had no effect. There is evidence in a variety of disciplines that significant findings are more likely to be accepted for publication than non-significant ones, whence “publication bias.”

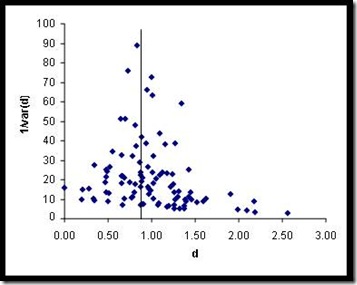

As Lehrer points out, a simple way to detect publication bias is the “funnel plot,” which is a graph of the size of an experimental effect plotted against a function of the sample size of the study (typically the reciprocal of the effect estimate’s standard error, or sometimes the reciprocal of its variance). As we move down the vertical axis, the effect-sizes should spread out symmetrically around their weighted mean if there is no publication bias. Deviations away from the mean will tend to be larger with smaller samples, but their direction does not depend on sample size. If there’s publication bias, the graph will be asymmetrical.
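To see where the asymmetry comes from, here is a small simulation sketch in Python. Everything in it is an assumption chosen for illustration rather than a model of any real literature: the true effect of 0.3, the three per-group sample sizes, the normal approximation with standard error sqrt(2/n) for Cohen’s d, and the rule that a study is published only if it is significant in the expected direction.

```python
import random
from statistics import mean

def simulate_published_effects(true_d=0.3, sizes=(10, 40, 160),
                               studies_per_size=2000, seed=1):
    """Simulate two-group studies and keep only the 'significant' ones.

    Assumes the observed effect size d is roughly normal around true_d
    with standard error sqrt(2 / n) for n participants per group, and
    that a study is published only if d / SE exceeds 1.96.
    Returns {n: (mean of all observed d, mean of published d)}.
    """
    rng = random.Random(seed)
    summary = {}
    for n in sizes:
        se = (2 / n) ** 0.5
        observed = [rng.gauss(true_d, se) for _ in range(studies_per_size)]
        published = [d for d in observed if d / se > 1.96]
        summary[n] = (mean(observed), mean(published))
    return summary
```

Across all simulated studies the average effect sits near the true value at every sample size. Among the published ones, the small-sample studies show much larger average effects than the large-sample studies, which is the lopsided lower end of the funnel.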

Here’s an example that I compiled several years ago for teaching purposes from Bond and Smith’s (1996) collection of 97 American studies of conformity using the Asch paradigm. The horizontal axis is the effect-size (Cohen’s d), which is a measure of how many standard deviations the experimental group mean differed from the control group mean. The vertical bar corresponds to the weighted average Cohen’s d (.916—an unweighted average gives 1.00). There’s little doubt that the conformity effect exists.

Nevertheless, there is some evidence of publication bias, as can be seen in the lower part of the graph where six studies with small sample sizes are skewed over to the right because their effect-sizes are the biggest of the lot. Their presence suggests that there should exist another small handful of studies skewed over to the left, perhaps with effects in the opposite direction to the conformity hypothesis. It’s fairly likely that there are some unpublished conformity studies that failed to achieve significant results or, more worryingly, came out with “negative” results. Publication bias also suggests that the average effect-size is being slightly over-estimated because of the biased collection of studies.

How could any of this account for a decline in effect-sizes over time? Well, here’s another twist to publication bias that wasn’t mentioned in Lehrer’s article and may have been overlooked by his sources as well. Studies further in the past tend to have been based on smaller samples than more recent studies. This is due, among other things, to increased computing power, ease and speed of gathering data, and the sheer numbers of active researchers working in teams.

Bigger samples have greater statistical power to detect smaller effects than smaller samples do. Given large enough samples, even trivially tiny effects become statistically significant. Tiny though they may be, significant effects are publishable findings. Ceteris paribus, larger-sample studies are less susceptible to publication bias arising from the failure to obtain significant findings. They’ll identify the same large effects their predecessors did (if the effects really are large) but they’ll also identify smaller effects that were missed by the earlier smaller-sample studies. Therefore, it is entirely likely that more recent studies, on average with larger sample sizes, will show on average smaller effects.
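A rough calculation shows how quickly the bar for significance drops as samples grow. This is a sketch under a stated assumption, namely the large-sample approximation that the standard error of Cohen’s d is about sqrt(2/n) for two equal groups of size n; the function name is mine.

```python
from statistics import NormalDist

def smallest_significant_d(n_per_group, alpha=0.05):
    """Smallest observed Cohen's d that reaches two-sided significance.

    Uses the approximation SE(d) ~ sqrt(2 / n_per_group) for two equal
    groups, which is an assumption made here for illustration.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return z_crit * (2 / n_per_group) ** 0.5
```

With 12 participants per group, only an observed d of about 0.80 or more clears the bar. With 200 per group, about 0.20 is enough.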

Let’s put this to an impromptu test with my compilation from Bond and Smith. The next two graphs show cumulative averages of sample size and effect size over time. These suggest some support for my hypothesis. Average sample sizes did increase from the mid-50’s to the mid-60’s, after which they levelled out. This increase corresponds to a decrease in (unweighted) average effect-size over the same period. But then there’s a hiccup, a jump upwards from 1965 to 1966. The reason for this is publication in 1966 of two studies with the largest effect-sizes (and small samples, N = 12 in each). It then takes several more years for the effect of those two studies to be washed out. Three of the other “deviant” top six effect-size studies were published in 1956-7 and the remaining one in 1970.

Now what about regression to the mean? Briefly, consider instructors or anyone else who rewards good performance and punishes poor performance. They will observe that the highest performers on one occasion (say, an exam) generally do not do as well on the second (despite having been rewarded) whereas the poorest performers generally do not do as badly on the second occasion (which the instructor may erroneously attribute to their having been punished). The upshot? Punishment appears to be more effective than reward.

However, these effects are not attributable to punishment being more effective than reward (indeed, a vast literature on behaviour modification techniques indicates the converse is true!). They are simply due to the fact that students’ performance on exams is not perfectly correlated from one exam to the next, even in the same subject. Some of the good (poor) performers on the first exam had good (bad) luck. Next time around they regress back toward the class average, where they belong.
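The exam example is easy to reproduce with made-up students. In this sketch each score is a fixed ability plus independent luck on each exam; the equal weighting of ability and luck, the class size, and the 10% cut-offs are all assumptions for illustration. Nobody is rewarded or punished anywhere in the code.

```python
import random
from statistics import mean

def exam_simulation(n_students=10000, fraction=0.1, seed=7):
    """Compare the best and worst exam-1 performers across two exams.

    Each score is ability + luck, with fresh luck on each exam.
    Returns {"top": (exam1 mean, exam2 mean), "bottom": (...)}.
    """
    rng = random.Random(seed)
    ability = [rng.gauss(0, 1) for _ in range(n_students)]
    exam1 = [a + rng.gauss(0, 1) for a in ability]
    exam2 = [a + rng.gauss(0, 1) for a in ability]
    # Rank students by their first-exam score only.
    order = sorted(range(n_students), key=lambda i: exam1[i])
    k = int(n_students * fraction)
    bottom, top = order[:k], order[-k:]
    return {
        "top": (mean(exam1[i] for i in top), mean(exam2[i] for i in top)),
        "bottom": (mean(exam1[i] for i in bottom), mean(exam2[i] for i in bottom)),
    }
```

The top group’s second-exam average falls back toward the class mean while staying above it, and the bottom group’s average rises toward it.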

Note that in order for regression to the mean to contribute to an apparent dwindling-truth phenomenon, it has to operate in conjunction with publication bias. For example, it could account for the declining trend in my effect-size graph after the 1965-6 hiccup. Nevertheless, considerations of regression to the mean for explaining the dwindling-truth phenomena probably haven’t gone quite far enough.

Seemingly the most puzzling manifestation of the dwindling-truth phenomenon is the diminution of an effect over the course of several studies performed by the same investigators or even during a single study, as in Jonathan Schooler’s attempts to replicate one of J.B. Rhine’s precognition experiments. Schooler claims to have seen numerous data-sets where regression to the mean does not account for the decline effect. If true, this can’t be accounted for by significant-effect-only publication bias. Some other selectivity bias must be operating. My chief suspect lies in the decision a scientist makes about whether or not to replicate a study, or whether to keep on gathering more data.

Would a scientist bother conducting a second experiment to replicate an initial one that failed to find any effect? Probably not. Would Schooler have bothered gathering as much data if his initial results hadn’t shown any precognition effect? Perhaps not. Or he may not have persisted as long as he did if his results were not positive in the short term. There are principled (Bayesian) methods for deciding when one has gathered “enough” data but most scientists don’t use them. Instead, we usually fix a sample size target in advance or use our own judgment in deciding when to stop. Having done one study, we’re more likely to attempt to replicate it if we’ve found the effect we were looking for or if we’ve discovered some new effect. This is a particular manifestation of what is known in the psychological literature as confirmation bias.

Why is this important? Let’s consider scientific replications generally. Would researchers be motivated to replicate a first-ever study that failed to find a significant effect for a new therapeutic drug? Probably not. Instead, it’s the study that shouts “Here’s a new wonder drug!” that begs to be replicated. After all, checking whether a therapeutic effect can be replicated fits squarely in the spirit of scientific skepticism and impartiality. Or does it? True impartiality would require also replicating “dud” studies such as a clinical trial of a candidate HIV vaccine that has failed to find evidence of its effectiveness.

In short, we have positive-finding replication bias: It is likely that scientists suffer from a bias in favor of replicating only those studies with statistically significant findings. This is just like rewarding only the top performers on an exam and then paying attention only to their subsequent performance. It invites dwindling effects and failures of replication due to regression to the mean. If scientists replicated only those studies that had null findings, then we would see regression to the mean in the opposite direction, i.e., the emergence of effects where none had previously been found.

In Schooler’s situation there’s a related regression-to-the-mean pitfall awaiting even the researcher who gathers data with no preconceptions. Suppose we gather a large sample of data, randomly split it in half, and discover some statistically significant patterns or effects in the first half. Aha! New findings! But some of these are real and others are due to chance. Now we turn to the second half of our data and test for the same patterns on the second half only. Some will still be there but others won’t be, and on average the strength of our findings will be lessened. Statisticians refer to this as model inflation. What we’ve omitted to do is search for patterns in the second half of the data that we didn’t discover in the first half. Model inflation will happen time and time again to scientists when they discover new effects or patterns, despite the fact that their initial conclusions appear statistically solid.
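The split-half scenario can be sketched the same way. The numbers here are invented for illustration: 5,000 candidate effects of which 20% are real with size 0.3, a standard error of 0.15 in each half, and a one-sided cut-off of 1.96 standard errors for “discovering” an effect in the first half.

```python
import random
from statistics import mean

def split_half_shrinkage(n_effects=5000, share_real=0.2, real_size=0.3,
                         se=0.15, seed=3):
    """Select effects that look significant in half 1, re-estimate in half 2.

    Returns (mean estimate in half 1, mean estimate in half 2, number selected)
    for the effects that passed the first-half significance screen.
    """
    rng = random.Random(seed)
    first, second = [], []
    for _ in range(n_effects):
        true_effect = real_size if rng.random() < share_real else 0.0
        est1 = rng.gauss(true_effect, se)  # estimate from the first half
        est2 = rng.gauss(true_effect, se)  # independent estimate from the second half
        if est1 / se > 1.96:               # 'discovered' in the first half
            first.append(est1)
            second.append(est2)
    return mean(first), mean(second), len(first)
```

The selected effects look weaker on average in the second half, even though nothing about the underlying effects has changed between halves.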

Thus, we have regression to the mean working hand-in-glove with positive-finding replication bias, significance-only publication bias, and increased sample sizes over time, all militating in the same direction. Perhaps it’s no wonder that we’re observing dwindling-truth phenomena that appear to defy the law of averages.

Are there any remedies? To begin with, understanding the ways in which human biases and regression to the mean act in concert gives us the wherewithal to face the problem head-on. Publication bias and model inflation are well-known but often overlooked (especially the latter). I’ve added two speculative but plausible conjectures here (the larger sample-size over time and replication-bias effects) that merit further investigation. It remains to be seen whether the mysterious dwindling-truth phenomena can be accounted for by these four factors. I suspect there may be other causes yet to be detected.

Several remedies for publication bias have been suggested (e.g., Ioannidis 2005), including larger sample sizes, enhanced data-registration protocols, and more attention to false-positive versus false-negative rates for research designs. Matters then may hinge on whether scientific communities can muster the political will to provide incentives for counteracting our all-too-human publication and replication biases.

Trust, Gullibility and Skepticism

In my first post on this blog, I claimed that certain ignorance arrangements are socially mandated and underpin some forms of social capital. One of these is trust. Trust lives next-door to gullibility but also has faith, agnosticism, skepticism and paranoia as neighbors. I want to take a brief tour through this neighborhood.

Trust enhances our lives in ways that are easy to take for granted. Consider the vast realm of stuff most of us “just know,” such as our planet is round, our biological parents really are our biological parents, and we actually can digest protein but can’t digest cellulose. These are all things we’ve learned essentially third-hand, from trusted sources. We don’t have direct evidence for these ideas. Hardly any of us would be able to offer proofs or even solid evidence for the bulk of our commonsense knowledge and ideas about how the world works. Instead, we trust the sources, in some cases because of their authority (e.g., parents, teachers) and in others because of their sheer numbers (e.g., everyone we know and everything we read agrees that the planet is round). Those sources, in turn, rarely are people who actually tested commonsense first-hand.

Why is this trust-based network of beliefs generally good for us? Most obviously, it saves us time, effort and disruption. Skepticism is costly, and not just in terms of time and energy (imagine the personal and social cost of demanding DNA tests from your parents to prove that you really are their offspring). Perhaps a bit less obviously, it conveys trust from us to those who provided the information in the first place. As a teacher, I value the trust my students place in me. Without it, teaching would be very difficult indeed.

And even less obviously, it’s this trust that makes learning and the accumulation of knowledge possible. It means each of us doesn’t have to start from the beginning again. We can build on knowledge that we trust has been tested and verified by competent people before us. Imagine having to fully test your computer to make sure it does division correctly, and spare a thought for those number-crunchers among us who lived through the floating-point-divide bug in the Intel P5 chip.

More prosaically, about 10 years ago I found myself facing an awkward pair of possibilities. Either (a) Sample estimates of certain statistical parameters don’t become more precise with bigger random samples (i.e., all the textbooks are wrong), or (b) There’s a bug in a very popular stats package that’s been around for more than 3 decades. Of course, after due diligence I found the bug. “Eternal vigilance!” a colleague crowed when I described to him the time and trouble it took, not only to diagnose the bug but to convince the stats package company that their incomplete beta function algorithm was haywire. But there’s the rub: If eternal vigilance really were required then none of us would make any advances at all.

Back to trust: when does it cease to be trust and become gullibility? There are the costs of trying to check out all those unsubstantiated clichés, and we also suffer from confirmation bias. Both tendencies make us vulnerable to gullibility.

Gullibility often is taken to mean “easily deceived.” Closer to the mark would be “excessively trusting,” but that begs the question of what is “excessive.” Can gullibility be measured? Perhaps, but one way not to do so is via the various “gullibility tests” abounding on the web. A fairly typical example is this one, in which you can attain a high score as a “free thinker” simply by choosing answers that go against conventional beliefs or that incline towards conspiracy theories. However, distrust is no evidence for lack of gullibility. In fact, extreme distrust and paranoia are as obdurate against counter-evidence as any purblind belief; they become a kind of gullibility as well.

Can gullibility be distinguished from trust? Yes, according to studies such as the Psychological Science study claiming that oxytocin makes people more trusting but not more gullible (see the MSN story on this study). Participants received either a placebo or an oxytocin nasal spray, and were then asked to play a trust game in which they received a certain amount of money they could share with a “trustee,” in whose hands the money would then triple. The trustee could then transfer all, part, or none of it back to the participant. So the participant could make a lot of money, but only if the trustee was reliable and fair.

Participants played multiple rounds of the trust game with a computer and virtual partners, half of whom appeared to be reliable and half unreliable. Oxytocin recipients offered more money to the computer and the reliable partners than placebo recipients did. However, oxytocin and placebo recipients were equally reluctant to share money with unreliable partners. So, gullibility may be a failure to read social cues.

It may be a comfort to know that clever people can be gullible too. After all, academic intelligence is no guarantee of social or practical intelligence. The popular science magazine Discover ran an April Fool’s article in 1995 on the hotheaded naked ice borer, which attracted more mail than any previous article published therein. It was widely distributed by newswires and treated seriously in Ripley’s Believe It or Not! and The Unofficial X Files Companion. While the author of this and other hoaxes expressed a few pangs of guilt, none of this inhibited Discover’s blog site from carrying this piece on the “discovery” that gullibility is associated with the “inferior supra-credulus,” a region in the brain “unusually active in people with a tendency to believe horoscopes and papers invoking fancy brain scans,” and a single gene called WTF1. As many magicians and con artists have known, people who believe they are intelligent also often believe they can’t be fooled and, as a result, can be caught off-guard. A famous recent case is Stephen Greenspan, professor and author of a book on gullibility, who became a victim of Bernie Madoff. At the other end of the scale is comedian Joe Penner’s catchphrase “You can’t fool me, I’m too ignorant.”

OK, but what about agnosticism? Why not avoid gullibility by refusing to believe any proposition for which you lack first-hand evidence or logico-mathematical proof? This sounds attractive at first, but the price of agnosticism is indecisiveness. Indecision can be costly (should you refuse your food because you don’t understand digestion?). Even if you try to evade indecision by tossing a coin you can still have accountability issues because, by most definitions, a decision is a deliberate choice of the most desirable option. Carried far enough, a radical agnostic would be unable to commit to important relationships, because those require trust.

So now let’s turn the tables. We place trust in much of our third-hand stock of “knowledge” because the alternatives are too costly. But our trust-based relationships actually require that we forgo complete first-hand knowledge about those whom we trust. It is true that we usually trust only people whom we know very well. Nevertheless, the fact remains that people who trust one another don’t monitor one another all the time, nor do they hold each other constantly accountable for every move they make. A hallmark of trust is allowing the trusted party privacy, room to move, and discretion.

Imagine that you’re a tenured professor at a university with a mix of undergraduate teaching, supervision of several PhD students, a research program funded by a National Science Foundation grant, a few outside consultancies each year, and the usual administrative committee duties. You’ve worked at your university for a dozen years and have undergone summary biannual performance evaluations. You’ve never had an unsatisfactory rating in these evaluations, you have a solid research record, and in fact you were promoted three years ago.

One day, your department head calls you into her office and tells you that there’s been a change in policy. The university now demands greater accountability from its academic staff. From now on, she will be monitoring your activities on a weekly basis. You will have to keep a log of how you spend each week on the job, describing your weekly teaching, supervision, research, and administrative efforts. Every cent you spend from your grant will be examined by her, and she will inspect the contents of your lectures, exams, and classroom assignments. Your web-browsing and emailing at work also will be monitored. You will be required to clock in and out of work to document how much time you spend on campus.

What effect would this have on you? Among other things, you would probably think you were distrusted by your department head. But why? she might ask. After all, this is purely an information-gathering exercise. You aren’t being evaluated. Moreover, it’s nothing personal; all the other academics are going to be monitored in the same way.

Most of us would still think that we weren’t trusted anymore. Intensive surveillance of that kind doesn’t fit with norms for trust behavior. It doesn’t seem plausible that all that effort invested monitoring your activities is “purely” for information gathering. Surely the information is going to be used for evaluation, or at the very least it could be used for such purposes in the future. Who besides your department head may have access to this information? And so on…

Chances are this would also stir up some powerful emotions. A 1996 paper by sociologists Fine and Holyfield observed that we don’t just think trust, we feel trust (pg. 25). By the same token, feeling entrusted by others is crucial for our self-esteem. Treatment of the kind described above would feel belittling, insulting, perhaps even humiliating. At first glance, the contract-like assurance of this kind of performance accountability seems to be sound managerial practice. However, it has hidden costs: Distrusted employees make disaffected and insulted employees who are less likely to be cooperative, volunteering, responsible or loyal—All qualities that spring from feeling entrusted.

Privacy, Censorship, Ignorance and the Internet

Wikileaks releases hundreds of thousands more classified US documents this weekend, and my wife and I have recently boogied our way through the American airport “naked scan” process (try googling ‘naked scan youtube’ to get a sample of public backlash against it). So, I have both censorship and privacy on my mind. They belong together. Concerns about privacy and censorship regarding the internet have been debated for more than a decade. All of these concerns are about who should have access to what and about whom, and whether regulation of such access is feasible.

Attempts to censor internet materials have largely been futile. In Australia (where I live) efforts to draft legislation requiring internet service providers to filter content have stalled for more than two years. Indeed, the net has undermined censorship powers over earlier mass communications media such as television. On grounds that it could prejudice criminal trials at the time, censorship authorities in the state of Victoria attempted to prevent its residents from watching “Underbelly,” a TV series devoted to gangland wars centered in Melbourne. They discovered to their chagrin that pirated versions of the program could be downloaded from various sources on the net.

How about privacy? Recently Facebook has been taken to task over privacy issues, and not unreasonably, although both Facebook and its users have contributed to those problems. On the users’ side, anyone can be tagged in a personally invasive or offensive photo, and before Facebook can remove the photo it may already have been widely distributed or shared. Conventional law does not protect people who are captured by a photograph in public because that doesn’t constitute an invasion of privacy. On Facebook’s part, in 2007 it launched the Beacon program, whereby user rental records were released in public. Many people regarded this as a breach of privacy, and a lawsuit ensued, resulting in the shutdown of Beacon.

And then there was Kate’s party. Unbeknown to Kate Miller, an invitation to a party at her apartment was sent out on Facebook. After prankster David Thorne posted the link on Twitter, people started RSVP’ing. After just one night, when it was taken down by Facebook, 60,000 people had said they were coming with a further 180,000 unconfirmed invitees. According to Thorne, this hoax was motivated by a desire to point out problems with privacy on Facebook and Twitter.

A cluster of concerns boils down to a dual use dilemma of the kind I described in an earlier post. The same characteristics of the net that defeat secrecy or censorship and democratize self-expression also can be used to invade privacy, steal identities, and pirate intellectual property or copyright material. For example, cookies are a common concern in the field of privacy, especially tracking cookies. Although most website developers use cookies for legitimate technical purposes, the potential for abuse is there. General concerns regarding Internet user privacy have become sufficient for a UN agency to issue a report on the dangers of identity fraud.

The chief dividing-line in debates about privacy and censorship is whether privacy is an absolute right or a negotiable privilege. Security expert Bruce Schneier’s 2006 essay on privacy weighs in on the side of privacy as a right. He points out that anti-privacy arguments such as “if you’re doing nothing wrong you have nothing to hide” assume that privacy is in the service of concealing wrongs. But love-making, diary-keeping, and intimate conversations are not examples of wrongdoings, and they indicate that privacy is a basic need.

Contrast this view with Google CEO Eric Schmidt’s vision of the future, in which children change their names at adulthood to escape embarrassing online dossiers of the kind compiled by Google. In a 2010 interview with him, Wall Street Journal columnist Holman Jenkins, Jr. records Mr. Schmidt predicting, “apparently seriously, that every young person one day will be entitled automatically to change his or her name on reaching adulthood in order to disown youthful hijinks stored on their friends’ social media sites.” Mr. Schmidt goes on to opine that regulation of privacy isn’t needed because users will abandon Google if it does anything “creepy” with their personal information. Among the more amusing comments posted in response, one respondent noted that Mr. Schmidt has blocked the Google Street-View images of his own holiday home on Nantucket.

Back to Wall Street Journal columnist Jenkins’ interview with the Google CEO: “Mr. Schmidt is surely right, though, that the questions go far beyond Google. ‘I don’t believe society understands what happens when everything is available, knowable and recorded by everyone all the time,’ he says.” This goes to the heart of the matter, and Joe and Jane Public aren’t the only ones who don’t understand this.

Theories and research about human communication have largely been hobbled by a default assumption that miscommunication or misunderstanding is aberrant and should be eliminated. For example, the overwhelming emphasis is on studying how to detect deception rather than investigating how it is constituted and the important roles it plays in social interaction. Likewise, the literature on miscommunication is redolent with negative metaphors: mechanistic terms like “breakdowns,” “disrepair,” and “distortion,” and critical-theoretic terms such as “disadvantage,” “denial,” and “oppression.” In the human relations school “unshared” and “closed” communications carry with them moral opprobrium. So these perspectives are blind to the benefits of unshared communication such as privacy.

Some experts from other domains concur. Steve Rambam famously declares that “Privacy is dead. Get over it.” David Brin claims (in his rebuttal to a critique of his book, The Transparent Society) that “we already live in the openness experiment, and have for 200 years.” The implicit inference from all this is that if only we communicated fully and honestly with one another, all would go well.

Really?

Let’s cut to the chase. Imagine that all of us—ZAP!—are suddenly granted telepathy. Each of us has instant access to the innermost thoughts and feelings of our nearest and dearest, our bosses, subordinates, friends, neighbors, acquaintances and perfect strangers. The ideal of noise-free, transparent, totally honest communication finally is achieved. Forget the internet—Now there really is no such thing as privacy anymore. What would the consequences be?

In the short term, cataclysmic. Many personal relationships, organizations, governments, and international relations would fall (or be torn) apart. There would be some pleasant surprises, yes, but I claim there would be many more negative ones, for two reasons. First, we have a nearly universal tendency to self-bolster by deluding ourselves somewhat about how positively others regard us. Second, many of us would be outraged at finding out how extensively we’ve been hoodwinked by others, not just those with power over us but also our friends and allies. Look at who American governmental spokespeople rushed to forewarn and preempt about the Wikileaks release. It wasn’t their enemies. It was their friends.

What about the longer term? With the masks torn off and the same information about anyone’s thoughts available to everyone, would we all end up on equal footing? As Schneier pointed out in his 2008 critique of David Brin’s book, those who could hang onto positions of power would find their power greatly enhanced. Knowledge may be power, but it is a greater power for those with more resources to exploit it. It would be child’s play to detect any heretic failing to toe the party or corporate line. And the kind of targeted marketing ensuing from this would make today’s cookie-tracking efforts look like the fumbling in the dark that they are.

In all but the most benign social settings, there would be no such thing as “free thinking.” Yes, censorship and secrecy would perish, but so would privacy and therefore the refuge so poignantly captured by Die Gedanken sind frei. The end result would certainly not be universal freedom of thought or expression.

Basic kinds of social capital would be obliterated and there would be massive cultural upheavals. Lying would become impossible, but so would politeness, tact, and civility. These all rely on potentially face-threatening utterances being softened, distorted, made ambiguous, or simply unsaid. Live pretence, play-acting or role-playing of any kind would be impossible. So would most personnel management methods. The doctor’s “bedside manner” or any professional’s mien would become untenable. For example, live classroom teaching would be very difficult indeed (“Pay attention, Jones, and stop fantasizing about Laura in the third row.” “I will, sir, when you stop fantasizing about her too.”). There would be no personas anymore, only personalities.

I said earlier that privacy and censorship belong together. As long as we want one, we’ll have to live with the other (and I leave it to you to decide which is the “one” and which the “other”). The question of who should know what about what or whom is a vexed question, and so it should be for anyone with a reasonably sophisticated understanding of the issues involved. And who should settle this question is an even more vexed question. I’ve raised only a few of the tradeoffs and dilemmas here. The fact that internet developments have generated debates about these issues is welcome. But frankly, those debates have barely scratched the surface (and that goes for this post!). Let’s hope they continue. We need them.

How can Vagueness Earn Its Keep?

Late in my childhood I discovered that if I was vague enough about my opinions no-one could prove me wrong. It was a short step to realizing that if I was totally vague I’d never be wrong. What is the load-bearing capacity of the elevator in my building? If I say “anywhere from 0 tons to infinity” then I can’t be wrong. What’s the probability that Samantha Stosur will win a Grand Slam tennis title next year? If I say “anywhere from 0 to 1” then once again I can’t be wrong.

There is at least one downside to vagueness, though: Indecision. Vagueness can be paralyzing. If the elevator’s designers’ estimate of its load-bearing capacity had been 0-to-infinity then no-one could decide whether any load should be lifted by it. If I can’t be more precise than 0-to-1 about Stosur’s chances of winning a Grand Slam title then I have no good reason to bet on or against that occurrence, no matter what odds the bookmakers offer me.

Nevertheless, in situations where we don’t know much but are called upon to estimate something, some amount of vagueness seems not only natural but also appropriately cautious. You don’t have to look hard to find vagueness; you can find it anywhere from politics to measurement. When you weigh yourself, your scale may only determine your weight within plus or minus half a pound. Even the most well-informed engineer’s estimate of load-bearing capacity will have an interval around it. The amount of imprecision in these cases is determined by what we know about the limitations of our measuring instruments or our mathematical models of load-bearing structures.

How would a sensible decision maker work with vague estimates? Suppose our elevator specs say the load-bearing capacity is between 3800 and 4200 pounds. Our “worst-case” estimate is 3800. We would be prudent to use the elevator for loads weighing less than 3800 pounds and not for ones weighing more than that. But what if our goal was to overload the elevator? Then to be confident of overloading it, we’d want a load weighing more than 4200 pounds.

A similar prescription holds for betting on the basis of probabilities. We should use the lowest estimated probability of an event for considering bets that the event will happen and the highest estimated probability for bets that the event won’t happen. If I think the probability that Samantha Stosur will win a Grand Slam title next year is somewhere from 1/2 to 3/4 then I should accept any odds longer than an even bet that she’ll win one (i.e., I’ll use 1/2 as my betting probability on Stosur winning), and if I’m going to bet against it then I should accept any odds longer than 3 to 1 (i.e., I’ll use 1 – 3/4 = 1/4 as my betting probability against Stosur). For any odds offered to me corresponding to a probability inside my 1/2 to 3/4 interval, I don’t have any good reason to accept or reject a bet for or against Stosur.
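The betting prescription above can be written out as a small decision rule. The following is only a sketch: the function names are hypothetical, and it assumes odds are quoted as "x to y against" the event, so that a bet on the event breaks even at probability y / (x + y).

```python
from fractions import Fraction


def implied_probability(odds_against, odds_for=1):
    """Break-even probability for a bet on an event offered at
    `odds_against` to `odds_for` against it (3 to 1 against gives 1/4)."""
    return Fraction(odds_for, odds_against + odds_for)


def betting_decision(lower, upper, implied_prob):
    """Classify an offer, given a probability interval [lower, upper]
    for the event and the break-even probability of the offered odds."""
    if implied_prob < lower:
        return "bet on"        # odds are longer than even the lowest estimate warrants
    if implied_prob > upper:
        return "bet against"   # odds are shorter than even the highest estimate warrants
    return "optional"          # no good reason to bet either way


# The interval from the text: P(Stosur wins) somewhere from 1/2 to 3/4.
low, high = Fraction(1, 2), Fraction(3, 4)

print(betting_decision(low, high, implied_probability(2)))      # 2 to 1 against
print(betting_decision(low, high, implied_probability(1, 2)))   # 1 to 2 against
print(betting_decision(low, high, implied_probability(1, 4)))   # 1 to 4 against
```

Passing the same number as both `lower` and `upper` gives the behavior of someone with a precise estimate, for whom the "optional" zone shrinks to a single point.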

Are there decisions where vagueness serves no distinct function? Yes: Two-alternative forced-choice decisions. A two-alternative forced choice compels the decision maker to elect one threshold to determine which alternative is chosen. Either we put a particular load into the elevator or we do not, regardless of how imprecise the load-bearing estimate is. The fact that we use our “worst-case” estimate of 3800 pounds for our decisional threshold makes our decisional behavior no different from someone whose load-bearing estimate is precisely 3800 pounds.

It’s only when we have a third “middle” option (such as not betting either way on Sam Stosur) that vagueness influences our decisional behavior distinctly from a precise estimation. Suppose Tom thinks the probability that Stosur will win a Grand Slam title is somewhere between 1/2 and 3/4 whereas Harry thinks it’s exactly 1/2. For bets on Stosur, Harry won’t accept anything shorter than even odds. For any odds shorter than that Harry will be willing to bet against Stosur. Offered any odds corresponding to a probability between 1/2 and 3/4, Tom could bet either way or even decide not to bet at all. Tom may choose the middle option (not betting at all) under those conditions, whereas Harry never does.

If this is how they always approach gambling, and if (let’s say) on average the midpoints of Tom’s probability intervals are as accurate as Harry’s precise estimates, it would seem that in the long run they’ll do about equally well. Harry might win more often than Tom but he’ll lose more often too, because Tom refuses more bets.

But is Tom missing out on anything? According to philosopher Adam Elga, he is. In his 2010 paper, “Subjective Probabilities Should Be Sharp,” Elga argues that perfectly rational agents always will have perfectly precise judged probabilities. He begins with his version of the “standard” rational agent, whose valuation is strictly monetary, and who judges the likelihood of events with precise probabilities. Then he offers two bets regarding the hypothesis (H) that it will rain tomorrow:

- Bet A: If H is true, you lose $10. Otherwise you win $15.

- Bet B: If H is true, you win $15. Otherwise you lose $10.

First he offers the bettor Bet A. Immediately after the bettor decides whether or not to accept Bet A, he offers Bet B.

Elga then declares that any perfectly rational bettor who is sequentially offered bets A and B with full knowledge in advance about the bets and no change of belief in H from one bet to the next will accept at least one of the bets. After all, accepting both of them nets the bettor $5 for sure. If Harry’s estimate of the probability of H, P(H), is less than .6 then he accepts Bet A, whereas if it is greater than .6 Harry rejects Bet A. If Harry’s P(H) is low enough he may decide that accepting Bet A and rejecting Bet B has higher expected return than accepting both bets.

On the other hand, suppose Tom’s assessment of P(H) is somewhere between 1/4 and 3/4. Bet A is optional because .6 lies in this interval, and so is Bet B. According to the rules for imprecise probabilities, Tom could (optionally) reject both bets. But rejecting both would net him $0, so he would miss out on $5 for sure. According to Elga, this example suffices to show that vague probabilities do not lead to rational behavior.

But could Tom rationally refuse both bets? He could do so if not betting, to him, is worth at least $5 regardless of whether it rains tomorrow or not. In short, Tom could be rationally vague if not betting has sufficient positive value (or if betting is sufficiently aversive) for him. So, for a rational agent, vagueness comes at a price. Moreover, the greater the vagueness, the greater value the middle option has to be in order for vagueness to earn its keep. An interval for P(H) from 2/5 to 3/5 requires the no-bet option to have value equal to $5, whereas an interval of 1/4 to 3/4 requires that option to be worth $8.75.
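The arithmetic behind the two bets can be checked directly. The sketch below computes each bet's expected return as a function of P(H), confirming the break-even points of .6 for Bet A and .4 for Bet B. The function names are hypothetical, and `no_bet_price` encodes only one possible reading of the pricing claim (the sure $5 from taking both bets, plus the worst-case expected loss on a single bet over the interval). That reading reproduces the $5 and $8.75 figures, but the original derivation may differ.

```python
from fractions import Fraction

WIN, LOSS = 15, -10  # dollar payoffs of each bet


def expected_bet_a(p):
    """Bet A: lose $10 if H is true, win $15 otherwise."""
    return LOSS * p + WIN * (1 - p)


def expected_bet_b(p):
    """Bet B: win $15 if H is true, lose $10 otherwise."""
    return WIN * p + LOSS * (1 - p)


def lower_expectation(bet, lower, upper):
    """Worst-case expected return over [lower, upper]; the expectation
    is linear in p, so the minimum sits at an endpoint."""
    return min(bet(lower), bet(upper))


def no_bet_price(lower, upper):
    """Assumed reading: sure gain from accepting both bets, plus the
    worst-case expected loss on a single bet (if there is one)."""
    sure_gain = WIN + LOSS  # accepting both bets nets $5 whatever happens
    worst = min(lower_expectation(expected_bet_a, lower, upper),
                lower_expectation(expected_bet_b, lower, upper))
    return sure_gain - min(worst, 0)


print(expected_bet_a(Fraction(3, 5)), expected_bet_b(Fraction(2, 5)))  # break-even points
print(no_bet_price(Fraction(2, 5), Fraction(3, 5)))
print(float(no_bet_price(Fraction(1, 4), Fraction(3, 4))))
```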

Are there situations like this in the real world? A very common example is medical diagnostic testing (especially in preventative medicine), where the middle option is for the patient to undergo further examination or observation. Another example is courtroom trials. There are trials in which jurors may face more than the two options of conviction or acquittal, such as mental illness or diminished responsibility. The Scottish system with its Not Proven alternative has been the subject of great controversy, due to the belief that it gives juries a let-out from returning Guilty verdicts. Not Proven is essentially a type of acquittal. The charges against the defendant are dismissed and she or he cannot be tried again for the same crime. About one-third of all jury acquittals in Scotland are the product of a Not Proven verdict.

Can an argument be made for Not Proven? A classic paper by Terry Connolly (1987) pointed out that a typical threshold probability of guilt associated with the phrase “beyond reasonable doubt” is in the [0.9, 1] range. For a logically consistent juror a threshold probability of 0.9 implies the difference between the subjective value of acquitting versus convicting the innocent is 9 times the difference in the value of convicting versus acquitting the guilty. Connolly demonstrated that the relative valuations of the four possible outcomes (convicting the guilty, acquitting the innocent, convicting the innocent and acquitting the guilty) that are compatible with such a high threshold probability are counterintuitive. Specifically, “. . . if one does [want to have a threshold of 0.9], one must be prepared to hold the acquittal of the guilty as highly desirable, at least in comparison to the other available outcomes” (pg. 111). He also showed that more intuitively reasonable valuations lead to unacceptably low threshold probabilities.
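The link between a threshold probability and the valuations of the four outcomes can be made concrete. The sketch below assumes the standard expected-utility rule (convict when the expected value of convicting exceeds that of acquitting), which may not match Connolly's exact formulation. The function name and the utility numbers are hypothetical and chosen only to reproduce the 9-to-1 ratio mentioned above.

```python
from fractions import Fraction


def conviction_threshold(convict_guilty, acquit_guilty,
                         acquit_innocent, convict_innocent):
    """Probability of guilt above which convicting has the higher
    expected value, given integer valuations of the four outcomes."""
    false_conviction_cost = acquit_innocent - convict_innocent
    false_acquittal_cost = convict_guilty - acquit_guilty
    return Fraction(false_conviction_cost,
                    false_conviction_cost + false_acquittal_cost)


# Hypothetical valuations where wrongly convicting costs 9 times as much
# as wrongly acquitting: the threshold comes out at 9/10.
print(conviction_threshold(convict_guilty=1, acquit_guilty=0,
                           acquit_innocent=0, convict_innocent=-9))

# Hypothetical valuations treating both errors as equally bad:
# the threshold drops to 1/2.
print(conviction_threshold(convict_guilty=1, acquit_guilty=0,
                           acquit_innocent=1, convict_innocent=0))
```

Note that in the first set of numbers, acquitting the guilty is valued the same as acquitting the innocent, which is the kind of counterintuitive valuation the quoted passage describes.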

So there seems to be a conflict between the high threshold probability required by “beyond reasonable doubt” and the relative value we place on acquitting the guilty versus convicting them. In a 2006 paper I showed that incorporating a third middle option with a suitable mid-range threshold probability can resolve this quandary, permitting a rational juror to retain a high conviction threshold and still evaluate false acquittals as negatively as false convictions. In short, a middle option like “Not Proven” would seem to be just the ticket. The price paid for this solution is a more stringent standard of proof for outright acquittal than any probability of guilt shy of .9, because Not Proven requires a standard of proof between .9 and some lower probability.

But what do people do? Two of my Honours students, Sara Deady and Lavinia Gracik, decided to find out. Sara and Lavinia both have backgrounds in law and psychology. They designed and conducted experiments in which mock jurors deliberated on realistic trial cases. In one condition the Not Proven, Guilty and Acquitted options were available to the jurors, whereas in another condition only the conventional two were available.

Their findings, published in our 2007 paper, contradicted the view that the Not Proven option attracts jurors away from returning convictions. Instead, Not Proven more often supplanted outright acquittals. Judged probabilities of guilt from jurors returning Not Proven were mid-range, just as the “rational juror” model said they should be. They acquitted defendants only if they thought the probability of guilt was quite low.

Vagueness therefore earns its keep through the value of the “middle” option that it invokes. Usually that value is determined by a tradeoff between two desirable properties. In measurement, the tradeoff often is speed vs accuracy or expense vs precision. In decisions, it’s decisiveness vs avoidance of undesirable errors. In betting or investment, cautiousness vs opportunities for high returns. And in negotiating agreements such as policies, it’s consensus vs clarity.

Are There Different Kinds of Uncertainties? Probability, Ambiguity and Conflict

The domain where I work is a crossroads connecting cognitive and social psychology, behavioral economics, management science, some aspects of applied mathematics and analytical philosophy. Some of the traffic there carries some interesting debates about the nature of uncertainty. Is it all one thing? For instance, can every version of uncertainty be reduced to some form of probability? Or are there different kinds? Was Keynes right to distinguish between “strength” and “weight” of evidence?

Why is this question important? Making decisions under uncertainty in a partially learnable world is one of the most important adaptive challenges facing humans and other species. If there are distinct (and consequential) kinds, then we may need distinct ways of coping with them. Each kind also may have its own special uses. Some kinds may be specific to an historical epoch or to a culture.

On the other hand, if there really is only one kind of uncertainty then a rational agent should have only one (consistent) method of dealing with it. Moreover, such an agent should not be distracted or influenced by any other “uncertainty” that is inconsequential.

A particular one-kind view dominated accounts of rationality in economics, psychology and related fields in the decades following World War II. The rational agent was a Bayesian probabilist, a decision-maker whose criterion for best option was the option that yielded the greatest expected return. This rational agent maximizes expected outcomes. Would you rather be given $1,000,000 right now for sure, or offered a fair coin-toss in which Heads nets you $2,400,000 and Tails leaves you nothing? The rational agent would go for the coin-toss because the expected return is 1/2 times $2,400,000, i.e., $1,200,000, which exceeds $1,000,000. A host of experimental evidence says that most of us would choose the $1M instead.

One of the Bayesian rationalist’s earliest serious challenges came from a 1961 paper by Daniel Ellsberg (Yes, that Daniel Ellsberg). Ellsberg set up some ingenious experiments demonstrating that people are influenced by another kind of uncertainty besides probability: Ambiguity. Ambiguous probabilities are imprecise (e.g., a probability we can only say is somewhere between 1/3 and 2/3). Although a few precursors such as Frank Knight (1921) had explored some implications for decision makers when probabilities aren’t precisely known, Ellsberg brought this matter to the attention of behavioral economists and cognitive psychologists. His goal was to determine whether the distinction between ambiguous and precise probabilities has any “behavioral significance.”

Here’s an example of what he meant by that phrase. Suppose you have to choose between drawing one ball from one of two urns, A or B. Each urn contains 90 balls, with the offer of a prize of $10 if the first ball drawn is either Red or Yellow. In Urn A, 30 of the balls are Red and the remaining 60 are either Black or Yellow, but the number of Yellow and Black balls has been randomly selected by a computer program, and we don’t know what it is. Urn B contains 30 Red, 30 Yellow, and 30 Black balls. If you prefer Urn B, you’re manifesting an aversion to not knowing precisely how many Yellow balls there are in Urn A, i.e., ambiguity aversion. In experiments of this kind, most people choose Urn B.

But now consider a choice between drawing a ball from one of two bags, each containing a thousand balls with a number on each ball, and a prize of $1000 if your ball has the number 500 on it. In Bag A, the balls were numbered consecutively from 1 to 1000, so we know there is exactly one ball with the number 500 on it. Bag B, however, contains a thousand balls with numbers randomly chosen from 1 to 1000. If you prefer Bag B, then you may have reasoned that there is a chance of more than one ball in there with the number 500. If so, then you are demonstrating ambiguity seeking. Indeed, most people choose Bag B.

Now, Bayesian rational agents would not have a preference in either of the scenarios just described. They would find the expected return in Urns A and B to be identical, and likewise with Bags A and B. So, our responses to these scenarios indicate that we are reacting to the ambiguity of probabilities as if ambiguity is a consequential kind of uncertainty distinct from probability.
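The claim that the expected returns are identical can be verified with exact fractions. This is a sketch under two assumptions the text does not spell out: that the computer picks the number of Yellow balls in Urn A uniformly from 0 to 60, and that each ball in Bag B gets its number independently and uniformly from 1 to 1000. The function names are hypothetical.

```python
from fractions import Fraction


def urn_a_win_probability():
    """Chance of drawing Red or Yellow from Urn A, averaged over the
    assumed uniform choice of the Yellow count (0 to 60)."""
    yellow_counts = range(61)
    total = sum(Fraction(30 + yellow, 90) for yellow in yellow_counts)
    return total / len(yellow_counts)


def urn_b_win_probability():
    """Urn B holds exactly 30 Red and 30 Yellow balls out of 90."""
    return Fraction(30 + 30, 90)


def bag_a_win_probability():
    """Bag A holds exactly one ball numbered 500 among 1000."""
    return Fraction(1, 1000)


def bag_b_win_probability():
    """Expected number of balls numbered 500 in Bag B, divided by 1000."""
    expected_matches = 1000 * Fraction(1, 1000)
    return expected_matches / 1000


def bag_b_chance_of_no_winner():
    """Chance that Bag B contains no ball numbered 500 at all."""
    return (999 / 1000) ** 1000


print(urn_a_win_probability(), urn_b_win_probability())
print(bag_a_win_probability(), bag_b_win_probability())
print(bag_b_chance_of_no_winner())
```

Under these assumptions the chance of a second winning ball in Bag B is exactly offset by the chance that it holds none at all, which is well over a third.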

Ellsberg’s most persuasive experiment (the one many writers describe in connection with “Ellsberg’s Paradox”) is as follows. Suppose we have an urn with 90 balls, of which 30 are Red and 60 are either Black or Yellow (but the proportions of each are unknown). If asked to choose between gambles A and B as shown in the upper part of the table below (i.e., betting on Red versus betting on Black), most people prefer A.

Ball counts: 30 Red; 60 Black or Yellow combined.

| Gamble | Red | Black | Yellow |
| --- | --- | --- | --- |
| A | $100 | $0 | $0 |
| B | $0 | $100 | $0 |
| C | $100 | $0 | $100 |
| D | $0 | $100 | $100 |

However, when asked to choose between gambles C and D, most people prefer D. People preferring A to B and D to C are violating a principle of rationality (often called the “Sure-Thing Principle”) because the (C,D) pair simply adds $100 for drawing a Yellow ball to the (A,B) pair. If rational agents prefer A to B, they should also prefer C to D. Ellsberg demonstrated that ambiguity influences decisions in a way that is incompatible with standard versions of rationality.
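To see why no single belief about the urn can justify both preferences, one can compute the expected payoffs of all four gambles for every possible number of Black balls. This is a sketch with a hypothetical function name; it treats the agent as a pure expected-value maximizer, as in the standard account.

```python
from fractions import Fraction


def expected_payoffs(black):
    """Expected dollar payoff of gambles A-D when the urn holds 30 Red,
    `black` Black and 60 - `black` Yellow balls."""
    p_red = Fraction(30, 90)
    p_black = Fraction(black, 90)
    p_yellow = Fraction(60 - black, 90)
    return {
        "A": 100 * p_red,
        "B": 100 * p_black,
        "C": 100 * (p_red + p_yellow),
        "D": 100 * (p_black + p_yellow),
    }


# Adding the Yellow prize shifts both gambles in a pair by the same amount,
# so the gap between A and B always equals the gap between C and D.
gaps_match = all(
    ev["A"] - ev["B"] == ev["C"] - ev["D"]
    for ev in map(expected_payoffs, range(61))
)

# Compositions under which A beats B and D beats C at the same time.
paradoxical = [
    black for black in range(61)
    if expected_payoffs(black)["A"] > expected_payoffs(black)["B"]
    and expected_payoffs(black)["D"] > expected_payoffs(black)["C"]
]

print(gaps_match, paradoxical)
```

Because expected values are linear, the same holds for any probability distribution over the possible compositions, not just for each composition taken one at a time.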

But is it irrational to be influenced by ambiguity? It isn’t hard to find arguments for why a “reasonable” (if not rigorously rational) person would regard ambiguity as important. Greater ambiguity could imply greater variability in outcomes. Variability can have a downside. I tell students in my introductory statistics course to think about someone who can’t swim considering whether to wade across a river. All they know is that the average depth of the river is 2 feet. If the river’s depth doesn’t vary much then all is well, but great variability could be fatal. Likewise, financial advisors routinely ask clients to consider how comfortable they are with “volatile” versus “conservative” investments. As many an investor has found out the hard way, high average returns in the long run are no guarantee against ruin in the short term.

So, a prudent agent could regard ambiguity as a tell-tale marker of high variability and decide accordingly. It all comes down to whether the agent restricts their measure of good decisions to expected return (i.e., the mean) or admits a second criterion (variability) into the mix. Several models of risk behavior in humans and other animals incorporate variability. A well-known animal foraging model, the energy budget rule, predicts that an animal will prefer foraging options with smaller variance when the expected energy intake exceeds its requirements. However, it will prefer greater variance when the expected energy intake is below survival level because greater variability conveys a greater chance of obtaining the intake needed for survival.
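A toy calculation shows the logic of the energy budget rule. The numbers are made up for illustration, and the sketch reduces the rule to a single quantity, the chance of meeting an energy requirement, which is a simplification of the actual foraging models.

```python
from fractions import Fraction


def chance_of_meeting(requirement, outcomes):
    """Probability that intake reaches `requirement`, where `outcomes`
    is a list of (intake, probability) pairs."""
    return sum(prob for intake, prob in outcomes if intake >= requirement)


def mean_intake(outcomes):
    """Expected intake of a foraging option."""
    return sum(intake * prob for intake, prob in outcomes)


# Two hypothetical foraging options with the same expected intake of 10.
steady = [(10, Fraction(1))]
variable = [(5, Fraction(1, 2)), (15, Fraction(1, 2))]

for requirement in (8, 12):
    print(requirement,
          chance_of_meeting(requirement, steady),
          chance_of_meeting(requirement, variable))
```

When the requirement (8) is below the expected intake, the steady option is the safer choice; when it is above (12), only the variable option offers any chance of survival.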

There are some fascinating counter-arguments against all this, and counter-counter-arguments too. I’ll revisit these in a future post.

I was intrigued by Ellsberg’s paper and the literature following on from it, and I’d also read William Empson’s classic 1930 book on seven types of ambiguity. It occurred to me that some forms of ambiguity are analogous to internal conflict. We speak of being “in two minds” when we’re undecided about something, and we often simulate arguments back and forth in our own heads. So perhaps ambiguity isn’t just a marker for variability. It could indicate conflict as well. I decided to run some experiments to see whether people respond to conflicting evidence as they do to ambiguity, but also pitting conflict against ambiguity.

Imagine that you’re serving on a jury, and an important aspect of the prosecution’s case turns on the color of the vehicle seen leaving the scene of a nighttime crime. Here are two possible scenarios involving eyewitness testimony on this matter:

A) One eyewitness says the color was blue, and another says it was green.

B) Both eyewitnesses say the color was either blue or green.

Which testimony would you rather receive? In which do the eyewitnesses sound more credible? If you’re like most people, you’d choose (B) for both questions, despite the fact that from a purely informational standpoint there is no difference between them.

Evidence from this and other choice experiments in my 1999 paper suggests that in general, we are “conflict averse” in two senses:

- Conflicting messages from two equally believable sources are dispreferred in general to two informatively equivalent, ambiguous, but agreeing messages from the same sources; and

- Sharply conflicting sources are perceived as less credible than ambiguous agreeing sources.

The first effect goes further than simply deciding what we’d rather hear. It turns out that we will choose options for ourselves and others on the basis of conflict aversion. I found this in experiments asking people to make decisions about eyewitness testimony, risk messages, dieting programs, and hiring and firing employees. Nearly a decade later, Laure Cabantous (then at the Université Toulouse) confirmed my results in a 2007 paper demonstrating that insurers would charge a higher premium for insurance against mishaps whose risk information was conflictive than if the risk information was merely ambiguous.

Many of us have an intuition that if there’s equal evidence for and against a proposition, then both kinds of evidence should be accessed by all knowledgeable, impartial experts in that domain. If laboratory A produces 10 studies supporting a hypothesis (H, say) and laboratory B produces 10 studies disconfirming H, something seems fishy. The collection of 20 studies will seem more trustworthy if laboratories A and B each produce 5 studies supporting H and 5 disconfirming it, even though there are still 10 studies for and 10 studies against H. We expect experts to agree with one another, even if their overall message is vague. Being confronted with sharply disagreeing experts sets off alarms for most of us because it suggests that the experts may not be impartial.
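
To make the laboratory example concrete, here is a minimal Python sketch. The study counts are the ones given above; the "disagreement" measure (the gap between the labs' support rates) is my own illustrative assumption, not a measure used in the experiments.

```python
# Each lab is a pair: (studies supporting H, studies disconfirming H).
# The counts come from the laboratory example in the text.
conflicting = [(10, 0), (0, 10)]  # lab A all for H, lab B all against
agreeing = [(5, 5), (5, 5)]       # both labs split evenly

def pooled_support(labs):
    """Fraction of all studies supporting H, ignoring which lab ran them."""
    total_for = sum(n_for for n_for, _ in labs)
    total = sum(n_for + n_against for n_for, n_against in labs)
    return total_for / total

def lab_disagreement(labs):
    """Illustrative assumption: gap between the highest and lowest
    per-lab support rates (0 = labs agree, 1 = labs fully opposed)."""
    rates = [n_for / (n_for + n_against) for n_for, n_against in labs]
    return max(rates) - min(rates)

for name, labs in [("conflicting", conflicting), ("agreeing", agreeing)]:
    print(name, pooled_support(labs), lab_disagreement(labs))
```

Both collections have the same pooled support for H, so a view that looks only at the pooled evidence cannot tell them apart. Only the between-lab measure separates them, and that is the feature that seems to set off our alarms.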

The finding that people attribute greater credibility to agreeing-ambiguous sources than conflicting-precise sources poses strategic difficulties for the sources themselves. Communicators embroiled in a controversy face a decision equivalent to a Prisoner’s Dilemma when they choose a communication strategy knowing that other communicators hold views contrary to their own. If they “soften up” by conceding a point or two to the opposition, they might win credibility points from their audience, but their opponents could play them for suckers by not conceding anything in return. On the other hand, if everyone concedes nothing then they all could lose credibility.
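
The structure of this dilemma can be sketched with a small payoff table. The credibility "points" below are hypothetical numbers I have made up purely so that the ordering matches a Prisoner's Dilemma; they are not estimates from any study.

```python
# Hypothetical credibility payoffs as (mine, theirs). The numbers are
# illustrative assumptions, chosen only to have Prisoner's Dilemma ordering.
PAYOFFS = {
    ("concede", "concede"): (3, 3),  # both soften up, both gain credibility
    ("concede", "hold"): (0, 5),     # I concede, my opponent plays me for a sucker
    ("hold", "concede"): (5, 0),     # I exploit my opponent's concession
    ("hold", "hold"): (1, 1),        # nobody concedes, everyone loses credibility
}

MOVES = ("concede", "hold")

def best_reply(opponent_move):
    """Return the move that maximizes my own payoff against a fixed opponent move."""
    return max(MOVES, key=lambda mine: PAYOFFS[(mine, opponent_move)][0])

for theirs in MOVES:
    print(f"If my opponent chooses {theirs!r}, my best reply is {best_reply(theirs)!r}")
```

Under these assumed payoffs, holding firm is the best reply whatever the opponent does, yet mutual holding leaves both communicators worse off than mutual concession. That is the trap.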

None of this is taken into account by the one-kind view of uncertainty. A probability “somewhere between 0 and 1” is treated identically to a probability of “exactly 1/2,” and sharply disagreeing experts are regarded as the same as ambiguous agreeing experts. But you and I know differently.


![clip_image002[7] clip_image002[7]](https://ignoranceanduncertainty.files.wordpress.com/2011/01/clip_image0027_thumb.gif?w=505&h=255)

![clip_image002[9] clip_image002[9]](https://ignoranceanduncertainty.files.wordpress.com/2011/01/clip_image0029_thumb.gif?w=507&h=256)